December 2015

Please note that republishing this article in full or in part is only allowed under the conditions described here.

HTTP Evasions Explained - Part 9 - How To Fix Inspection

TL;DR

Since the first release of HTTP Evader, the malware analysis in almost all of the tested firewalls, IPS, NGFW, UTM ... could be easily bypassed using uncommon or invalid responses from the web server. Some of these products have provided fixes in the meantime and several others are still working on fixes. Some products might never be fully fixed.

But why were these products affected at all, even the devices which claimed to have Advanced Threat Protection (ATP)? This ninth part of the series about HTTP level evasions looks into the underlying problems of doing reliable application level inspection and the limitations of the different technologies used for it, and shows how it can be done better.

The previous article in this series was Part 8 - Borderline Robustness and the next part is Part 10 - Lazy Browsers.

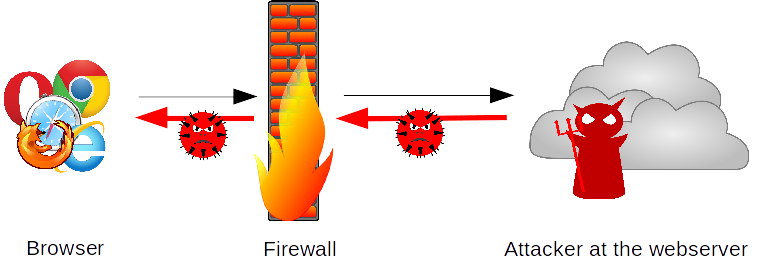

Attack Scenario

The scenario in the context of this article is an attacker at the web server and a victim using a browser, i.e. drive-by-download attacks and similar. The firewall should be there to protect the client. The attacker might have only partial control of the server, i.e. can use some PHP scripts to generate content. Or the attacker might have full control and could even control the software running as the web server. The attacker aims to transport malware to the client so that the IPS will not detect it. This means I'm not talking here about protecting a server with a Web Application Firewall or protecting the client using URL blacklists.

Just Words: Firewall, IDS, IPS, NGFW, UTM, ....

There are lots of different words for the devices I talk about, ranging from the classical "Firewall", via Intrusion Detection Systems (IDS) and Intrusion Prevention Systems (IPS), Secure (Web) Gateways (SG, SWG) to today's Next Generation Firewalls (NGFW) or Unified Threat Management solutions (UTM). None of these words really describes a technology or quality and thus one will find lots of different technologies at different quality levels marketed with these names.

Today most devices which can do some kind of application level inspection are marketed as NGFW or UTM, which means that it is hard to associate any kind of quality with these names any more. Some vendors therefore try to top this by adding the Advanced Threat Protection (ATP) label which is again mostly marketing without any specific technology behind it.

Even if the label "firewall" or "IPS" is used in the following, I'm talking about the devices which claim to be the best, i.e. NGFW and UTM products which can mostly also do SSL interception to inspect https connections and which are often labeled with Advanced Threat Protection or similar.

Theoretical Limitations: Passive vs. Active Inspection

There are two basic technologies in application level analysis: passive and active inspection. While the first one is usually faster and needs fewer resources, it is more limited in the protection it can achieve.

Passive inspection only checks if the data looks good or potentially malicious. In the latter case it throws an alert or terminates the connection, while in the former case it simply lets the data pass through unchanged. Typical examples of passive analysis are classical Intrusion Detection Systems like the open source IDS Snort. But based on the behavior I've seen with the tested products I'm sure that several of the commercial products also do passive analysis only.

The main problem for passive inspection is the gray area where the data is neither well formed nor looks really bad. This happens when uncommon parts of the HTTP protocol or a slightly invalid protocol is used. Since browsers usually deal somehow with these kinds of problems, it would be bad to block the data, since this could result in broken websites. But it would not be a good idea to simply let all this data pass either, because the browser might interpret the data differently from the inspection device and thus malware slips through.

Thus passive inspection in security devices faces the same problems as anti-virus, which also only does passive analysis: either it blocks too much (false positives) and breaks web sites, or it blocks too little (false negatives) and potentially lets malware through. And the current solution is also the same as in anti-virus: when in doubt, it lets the data pass, because otherwise customers would complain too often about broken web sites.

Active inspection, in contrast, not only looks at the data but can also modify it. Thus it can in theory sanitize the protocol to make sure that only a fully sane protocol is spoken with the client, i.e. no uncommon or invalid protocol parts. This way it can ensure that the data as seen by the browser is the same as seen by the IPS and that the analysis cannot be bypassed due to a different interpretation of the data. Typical examples of active inspection are application level gateways, i.e. proxies. Several of the tested products seem to employ this technology.

Another advantage of active inspection is that one can already modify the request so that the response can be better analyzed. Since many browsers today not only support gzip and deflate compression, but also sdch (Chrome, Opera), lzma (Opera) and brotli (new with Firefox 44), a device with passive inspection needs to be able to understand all these compression methods. Devices with active inspection can instead restrict the compression methods by modifying the Accept-Encoding header before forwarding the request, and can then reject a response which uses an unsupported compression method because the response is invalid for the sent request.
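The Accept-Encoding restriction described above can be sketched roughly as follows. This is only a minimal illustration, not how any specific product works: the function names, the representation of headers as a list of (name, value) pairs and the choice of gzip and deflate as the supported set are all assumptions, and q-values in the request header are deliberately ignored.

```python
# Assumption: the encodings this hypothetical inspection device can decode.
SUPPORTED_ENCODINGS = ("gzip", "deflate")


def restrict_accept_encoding(request_headers):
    """Rewrite the request so that it only offers encodings that the
    client asked for AND that the device can decode."""
    offered = []
    kept = []
    for name, value in request_headers:
        if name.strip().lower() == "accept-encoding":
            for item in value.split(","):
                # Simplification: drop parameters such as ";q=0.5".
                token = item.split(";")[0].strip().lower()
                if token in SUPPORTED_ENCODINGS and token not in offered:
                    offered.append(token)
        else:
            kept.append((name, value))
    kept.append(("Accept-Encoding", ", ".join(offered) if offered else "identity"))
    return kept


def response_encoding_allowed(response_headers):
    """Return False if the response uses any encoding that was never
    offered in the rewritten request, so it can be rejected."""
    for name, value in response_headers:
        if name.strip().lower() != "content-encoding":
            continue
        for token in value.split(","):
            token = token.strip().lower()
            if token and token != "identity" and token not in SUPPORTED_ENCODINGS:
                return False
    return True
```

With this, a request offering "gzip, deflate, sdch, br" is forwarded offering only "gzip, deflate", and a response which nevertheless arrives with "Content-Encoding: br" can be rejected instead of being forwarded without analysis.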

Practical Limitations: Accuracy of Inspection

HTTP looks like a simple protocol, but it has parts where the interpretation has changed between protocol revisions and parts where the full implications are not obvious. It also borrows heavily from mail but differs in some aspects. This results in lots of different implementations which agree in the core functionality but differ in the fine details. And just as browsers adhere to the protocol only in the commonly used cases, IPS differ in the details too, both from the browsers and from the specification.

Probably most of the HTTP implementations which can be found in the products are not designed with a protocol level attacker in mind. While I'm not able to look at the source code of the commercial products, I had a look at the open source IDS Snort, which is also often used in commercial products. There they only pick the information they need and ignore everything else. This way they are not even aware that an attack is in progress. Similar behavior can be seen when looking at the open source IDS Suricata and Bro.

Even worse is the behavior of IPS if they don't understand the data fully, like in the case of unsupported compression schemes. Most IPS simply forward the data without further analysis if they don't know the compression scheme, because blocking might cause broken websites. The reasoning might be that customers will notice if something gets broken and will be annoyed, but they will usually not notice if the data simply doesn't get analyzed. This kind of thinking can also be seen in the reaction to obviously broken data, like gzip compressed data with a wrong checksum. Several IPS simply forward this data even though they are not able to decompress it for analysis.
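The opposite policy, i.e. blocking whatever cannot be decoded for analysis, can be sketched in a few lines. This is a minimal illustration using Python's standard gzip and zlib modules; the function name and the convention of returning None for "block" are assumptions made for this example only.

```python
import gzip
import zlib


def decode_body_or_block(body, content_encoding):
    """Return the decoded body for analysis, or None if the body
    cannot be decoded and should therefore be blocked."""
    encoding = content_encoding.strip().lower()
    try:
        if encoding in ("", "identity"):
            return body
        if encoding == "gzip":
            # Raises an OSError subclass on a bad header, wrong CRC or
            # wrong length, and EOFError on truncated data.
            return gzip.decompress(body)
        if encoding == "deflate":
            # Simplification: only zlib-wrapped deflate is accepted here,
            # raw deflate streams end up being blocked.
            return zlib.decompress(body)
    except (OSError, EOFError, zlib.error):
        return None  # broken data: do not forward what was not analyzed
    return None  # unknown compression scheme: same policy
```

The design choice here is simply that "could not analyze" is treated like "malicious" instead of like "harmless". Whether blocking raw deflate is acceptable in practice is a separate question, since some servers send it.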

How To Do It Better

I don't think reliable inspection can be done with passive inspection only, at least not for an often abused protocol like HTTP. Invalid HTTP responses, like ones having spaces inside the HTTP header field names, can be seen even at the top web sites. There is a large variety of independently developed HTTP stacks, ranging from major web servers down to minimal implementations in routers and IoT devices. And since browsers are very forgiving regarding protocol errors, developers don't even realize how broken their implementation is, because it just works.

Active inspection offers a way to be tolerant when receiving data with protocol violations, while at the same time adhering strictly to the protocol and using only commonly implemented features when sending the data. This way it guarantees that all clients protected by the IPS interpret the data in the same way as the IPS and no bypass is possible. Or to say it with the Robustness Principle: "Be conservative in what you send, be liberal in what you accept". Passive inspection can only liberally accept the data but is not able to be conservative in sending, while active inspection can do both.
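To make "liberal in accepting, conservative in sending" a bit more concrete, here is a minimal sketch of a header sanitizer. It assumes it gets only the header block of a response (without the status line) as bytes. The function name and the policy of dropping fields which cannot be expressed cleanly are assumptions for illustration, not a description of any tested product.

```python
import re

# Characters allowed in a header field name ("token" in RFC 7230).
TOKEN = re.compile(r"[!#$%&'*+\-.^_`|~0-9A-Za-z]+")


def sanitize_headers(raw):
    """Parse a header block tolerantly and re-emit it in strict form."""
    fields = []
    last_kept = False
    # Liberal in accepting: CRLF, bare LF and bare CR all end a line.
    for line in re.split(r"\r\n|\n|\r", raw.decode("latin-1")):
        if not line.strip():
            continue
        if line[0] in " \t":
            # Obsolete line folding: join onto the previous value, but
            # only if the field it belongs to was kept.
            if last_kept:
                name, value = fields[-1]
                fields[-1] = (name, (value + " " + line.strip()).strip())
            continue
        name, sep, value = line.partition(":")
        name = name.strip()
        # A field without colon or with an invalid name (e.g. a space
        # inside the name) is dropped instead of being passed through.
        last_kept = bool(sep) and TOKEN.fullmatch(name) is not None
        if last_kept:
            fields.append((name, value.strip()))
    # Conservative in sending: "Name: value" and CRLF only, no folding.
    out = "".join(f"{name}: {value}\r\n" for name, value in fields)
    return (out + "\r\n").encode("latin-1")
```

The important point is that the device analyzes exactly the fields it emits. A field like "Content Encoding: gzip" is seen neither by the device nor by the browser, so both end up with the same interpretation even if this breaks the page.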

Being conservative in sending provides another bonus: even if the received data is interpreted in a wrong way by the IPS, the conservative sending will guarantee that it is interpreted in the same way by the web client. Thus it might break a web site in the worst case, but it would never let malware pass to the client just because client and IPS interpret the protocol in a different way. For instance, you can improve the security of several setups by putting a current squid web proxy in front of the IPS, so that the proxy sanitizes the response from the web server before it gets analyzed and forwarded by the IPS.

Too Much To Read, I Want To See Action

If you are curious how badly your browser or your IPS behaves with uncommon or invalid responses from the web server, head over to the HTTP Evader test site. To read more about the details of specific bypasses, go to the main description of HTTP Evader.